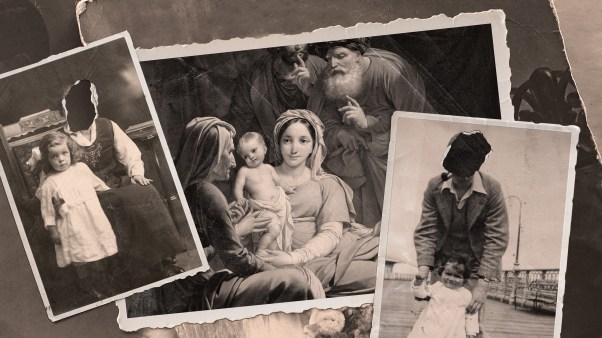

Christians believe that people, unlike robots, are made in the image of God. But shows like Westworld make us wonder: In whose image are robots made?

The answer seems to be “ourselves.” As research into artificial intelligence (AI) advances, we will keep making AI in our own image. But can Christian thought provide an alternative approach to how robots are made?

The original Westworld, which starred Yul Brynner as the Gunslinger, was a product of the 1970s—a time when an intelligent robot was still a far-off possibility. At the time, filmmakers and audiences treated these robots instrumentally; there was little sympathy for the robot dead.

Times, however, have changed. Christopher Orr, writing in The Atlantic, notes a major philosophical shift in the new version of Westworld: a shift from concern for the creators, made of flesh and blood, to concern for the created, made of steel and silicon.

As Orr points out, storytellers in books and film have wondered about intelligent robots for at least a century. What is changing now, Orr notes, is the perspective: the old instrumental view of artificial intelligence is giving way to a much more personal one. Like the iPhone-esque tricorder that Star Trek popularized in the 1960s, the intelligent robot, made possible by rapid advances in AI, is no longer a figment of science fiction but a developing reality poised to take center stage in our rapidly changing world. Indeed, just this month, the European Union began discussing the establishment of “electronic personhood” to assign rights and responsibilities to the most sophisticated AI.

Exactly what this implies legally will be controversial, but the one part of AI that everyone understands is the “artificial” part. AI is clearly “artificial” in the sense that it will always be created by human artifice, not through natural processes or supernatural agency. We may object to the way our Creator made us and our world (at least in its current, imperfect state), but as we inch closer toward some hoped-for singularity, we may find that being a creator ourselves is much more difficult than we realize. AI won’t be the first test for humanity, but it may prove to be one of the biggest.

The Uneasy Ethics of Electronic Personhood

The philosopher Charles Taylor has popularized the idea that our identity, the “who we are” part of ourselves, is a relatively recent development in human history. One key aspect of this identity is what he calls “moral agency”: the distinction between what one should do and what one actually does. This moral agency is what allows people to be persons, defined by their relationships to others.

Increasingly, however, we are designing creations that are beginning to have something at least resembling moral agency, from commonly used computer algorithms that predict what products we want to buy to the self-driving cars that make life-or-death decisions about when to stop and when to go. These machines’ moral agency may only be partial, but it nonetheless raises questions about how we ought to act toward them.

Admittedly, though, this tension isn’t new. Way back in 1994, when computers were large, square, beige, and decidedly non-people-like, studies like those by Nass, Steuer, and Tauber found across a number of different tests that people acted socially toward computers. This was before computers could really talk back, as they do in the Spike Jonze movie Her, where in the not-too-distant future a sad and lonely letter writer falls in love with his computer’s AI operating system.

Of course, our social behavior toward computers doesn’t necessarily imply personhood. Seventeen years later, though, a 2011 study found that a small percentage of college students were willing to attribute personhood to robots, and that atheistic students made this connection much more frequently than theistic students did. (Not surprisingly, most students in the study strongly tied the words “human” and “fetus” to the word “person.” Many students also tied the words “dead,” “angels,” “bear,” and “dog” to the word “person.”)

As has often been the case with other issues, our changing perspectives on AI will likely be driven by culture. In the movie Chappie, for example, a police robot becomes sentient and struggles to do what is right, and in the movie Ted 2, a magical bear goes to court to be recognized as a “person.” In both cases, audiences are urged to agree that Chappie and Ted are people just like us.

These perspectives will also be driven by the inherent brokenness of our world. What if, one might wonder, we engage in questionable pursuits in our youth and turn away from them later in life, only to have a future Siri push us back toward those rejected pursuits? What if a self-driving vehicle’s code instructs it to avoid a jaywalking pedestrian, costing the lives of a family of four in an autonomous minivan? What then of the EU’s idea of “electronic personhood”?

In an effort to promote AI, corporations that sell and develop AI for home use will point to all the great things that AI can accomplish: mow our lawns, walk our dogs, cook our dinners, and make our beds. And it’s true—AI will make life easier. Easier, different—but not necessarily better.

Mere Morality Is Not Enough

Such complicated, uneasy relationships with AI are, and will continue to be, built on our flawed nature as creators. As sinful creatures, we cannot help but imbue our creations with flaws. There is a real danger that we will be selfish and amoral creators, fashioning intelligent designs that exist simply to serve our own interests and desires, or our own sense of right and wrong. The immorality we have wrought on our world will be magnified by AI.

This is not to say that we should not create—being made in the image of God, we want to be like God, so we naturally create as God created. But there may be a way to create better: Instead of looking to ourselves and creating in our own image, we can—and should—look to God’s original design. When we create, we can intentionally do so in accordance with the image of God, rather than ourselves.

The Obama White House report from last fall identifies “ethics” as “a necessary part of the solution” when coming to terms with AI. Significantly, though, the report doesn’t tell us much about how ethics will help us with AI—and, indeed, from a Christian perspective, a merely “ethical” approach to AI is not enough, just as a merely “ethical” approach to who we are is insufficient. Our morality may differentiate us from other animals in our world, but it is not who we are.

As Abraham van de Beek, professor emeritus at Vrije Universiteit Amsterdam, explains, “who we are” is not found in looking inward at ourselves but outward toward our Creator. This is because when we look inward, we only see an illusion of ourselves—an identity we created to cover over our true identity, which can only be discovered in relation to our Creator. Only when we deny ourselves can we know who we are.

AI and the Imago Dei

There is an alternative to the dangers of creating in our own broken image. Designing creatures and caring for them requires more than just setting ethical parameters. We can take our cue from Scripture, where standards for living are an important but secondary part of what it means to be human. What makes us human is the divine design itself.

Think of it like this: When God designed people, he didn’t ask the question, “How can we make people to make our life better?” or “How can we make people to serve us?” He could have done that and been just in doing so—but instead, he chose to make people in his own image, with the ability to relate and love, not just receive commands. If we want to follow God’s creative example, perhaps we, too, should aim to fashion things with the ability to relate and love, and not just to receive commands.

Indeed, the conflict common to stories such as Westworld, Ted 2, and Chappie is that people exploit robots because people create robots that are exploitable. Because we are made in the image of God, with the ability to choose or reject love, we are not inherently exploitable by God. We can only exploit ourselves.

This is not, of course, an argument for an unchecked or parameter-less AI; rather, it is a reminder of Jesus’ example that sometimes the Creator must take the role of the servant for the good of the created. Jesus was willing to humble himself for our sake. He was willing to show love to his creation. As we act as sub-creators ourselves, we can do the same.

Douglas Estes is an Assistant Professor of New Testament & Practical Theology and DMin Program Director at South University—Columbia, as well as the author/editor of many books on biblical scholarship and the church, including Questions and Rhetoric in the Greek New Testament (Zondervan, 2017). His focus is the intersection of text, church, and world.