
Five months prior to the release of ChatGPT in November 2022, AI researcher and Google vice president Blaise Agüera y Arcas described in The Economist his conversation with Google’s LaMDA (Language Model for Dialogue Applications), a precursor of later Gemini models. He wrote of the experience, “I felt the ground shift under my feet. I increasingly felt like I was talking to something intelligent.” Around a week later, Google engineer Blake Lemoine publicly alleged that LaMDA had become a sentient intelligence.

When we interact with an AI model, it can be easy to subtly ascribe some measure of natural intelligence to the system, even though none is present. As these models continue to be integrated into our technology and devices, how should we view AI systems, especially in the context of our own natural intelligence?

Natural intelligence is the God-given gift to understand and reason about reality, one another, and ourselves. By contrast, artificial intelligence is the subdiscipline of computer science concerned with building models to perform tasks often associated with natural intelligence, like solving a puzzle or summarizing a text. The gap between natural and artificial intelligence is sometimes portrayed as small but is in fact a wide chasm.

While some AI techniques are inspired by ideas in neuroscience and behavioral psychology, most models bear little resemblance to biological systems. Other AI methods draw from disciplines like signal processing, evolutionary biology, and Newtonian mechanics. For example, genetic algorithms are a class of optimization techniques inspired by evolutionary principles of natural selection, mutation, and speciation. AI researchers have remarked that “biological plausibility is a guide, not a strict requirement” for designing AI models. While a task may appear to require the biological machinery of natural intelligence, an AI model need not emulate this machinery to be successful.

Natural and artificial intelligence are not effectively interchangeable. Believing they are equivalent is an affront to those possessing natural intelligence and a disservice to those developing artificial intelligence.

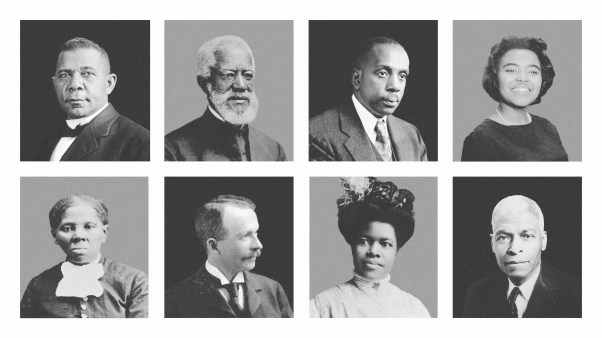

Measuring natural intelligence is different from quantifying the performance of an AI model. Psychologists have long known that natural intelligence cannot be condensed to a single score, such as IQ. Many theories developed to quantify natural intelligence have troubling roots in pseudoscientific ideas like eugenics, phrenology, and social Darwinism. And many intelligence scores were designed to privilege certain individuals over others.

Still, it’s challenging to measure natural intelligence, especially when including nonhuman intelligences. Evaluating the performance of an AI model at a specific task is comparatively straightforward: We query a model with a set of inputs and compare the outputs with our expectations. A growing number of benchmarks for large language models seek to quantify performance on tasks ranging from passing the bar exam to accurately translating texts to making moral decisions.

As AI models continue to improve according to industry-established benchmarks, we should learn from our mistakes when quantifying natural intelligence. Scoring the intelligence of participants with a single number can be dangerously reductive, regardless of whether the comparison is between two people or between two models.

Our need to measure the intelligence of our models and ourselves reflects how valuable (socially and monetarily) we consider intelligence. At least one open letter written by the Future of Life Institute and signed by many AI experts contained this notable phrase: “Everything that civilization has to offer is a product of human intelligence.”

Prioritizing intelligence as the only source of progress discounts other God-given traits like creativity and wisdom. Idolizing intelligence dismisses long-held Christian attributes like piety, humility, and self-sacrifice. Our societal worship of intelligence amid powerful AI models has led many to fear their own imminent devaluation. The science fiction stories we tell about a hypothetical artificial general intelligence (AGI), wherein a superintelligent machine subjugates those it deems intellectually inferior, tend to mirror our own history: Our colonizing predecessors readily did the same.

Christians can forge a path between the extremes of idolizing and repudiating intelligence. We know that we are required to “act justly, love mercy, and walk humbly with [our] God” (Mic. 6:8). Intelligence alone is insufficient to carry out God’s will for our lives. We are called to “not conform to the pattern of this world, but be transformed by the renewing of [our] mind” (Rom. 12:2). So let us willingly surrender our natural intelligence to God for him to use and mold.

As for artificial intelligence, we should not confuse the tools we build with the minds we are granted. Let us instead wield all the tools given to us to further God’s kingdom.

Marcus Schwarting is the senior editor at AI and Faith. He is also a researcher applying artificial intelligence to problems in chemistry and materials science.