Author Nicholas Carr joined The Russell Moore Show to talk about how technologies that promise to connect us are instead damaging our relationships and our ability to make sense of the world. This excerpt from their conversation appeared first in print. Listen to the entire episode after July 9. This interview was edited for clarity and length.

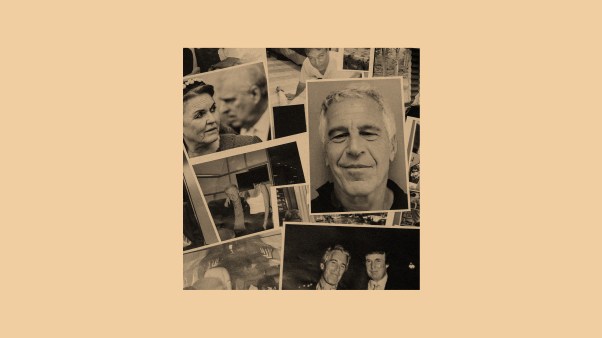

Illustration by Ronan Lynam

Russell Moore: A lot of people think of technologies (such as AI and social media) merely as tools. One of the arguments that you make in your book Superbloom is that the way we use these technologies changes our experience of the world.

Nicholas Carr: One of the big points that I try to make in the book is that human beings grew up in a physical world, in a material world. We are profoundly ill-suited to living our lives, particularly our social lives, online.

We thought that being able to communicate with a much broader set of people more quickly in much greater volume would expand our horizons, would give us more social context, a deeper understanding of each other.

What we’ve seen is that we are overwhelmed by the communication that we thought would liberate us. And it turns out that a lot of our social identity hinges on being in physical places with groups of people. That doesn’t mean we can’t extend that with telephone calls and letter-writing and everything. But human beings are very much dependent on being together in the physical world.

Until social media came along, you’d go out and you’d be with one set of people. Maybe it would be your classmates in school, or your coworkers at work, or your family. Maybe it would be a group of friends going out to a restaurant. You’d socialize there and you’d learn about one another. And then you’d separate, and there would be time when you were by yourself. You could think back over what just happened and about your relationships. You would have downtime, in which you could organize your thoughts, question yourself, and relax for a little while—because there is a stress involved in socializing. And then you would go on to another place at another time with another group of people. And it’s through these physical interactions that we expand our empathetic connection to other people.

RM: That makes me think of the ideal paradigm for church life that we have had over many generations: A group of people who are gathered together some of the time and then are doing their own spiritual work apart from that, and then gathering back together for acts of service or mission.

NC: And it’s both the being together and the being apart that’s important. When you transfer social life onto the internet and you interact with people through screens, then the rhythm of your life, the tempo of your life, is completely different. You can socialize all the time because the social world is there in the form of your phone, which we’ve trained ourselves to carry all the time.

It’s not just one set of people you’re interacting with. It’s everyone. It’s people you go to school with, people you work with, your parents, your children, your friends, anonymous crowds of commenters online, and so forth. All the socio-temporal divisions, the space and time divisions that are inherent to living and socializing in the real world, in the physical world, are simply decimated.

A lot of the antisocial behavior, the rudeness, the polarization of views, and the shunning of other people that we see online comes from the fact that our lives have lost their connection to space and time.

RM: One of the things that I’ve noticed for some time now in evangelical Christianity is a group of young men who don’t seem to aspire to be preachers or pastors or even scholars in the way that previous generations would have aspired to those things. They want to be “edgelords” on the internet. One of the ways to do that is to post something really shocking in the hopes that people will react to it. And you can see these young men become more extreme, sometimes to the point of Nazification.

I thought about that phenomenon as I was reading your book. You quote sociologist Sherry Turkle about this digital way of life as an “anti-empathy machine.” And what we have seen is that empathy itself is viewed as a sin, a fake virtue. What does technology do to our ability to have empathy?

NC: One of the great strengths of human beings is how adaptable we are. We can adapt to different situations very well. But adaptation doesn’t necessarily make you better. You can adapt to an environment in a way that makes you less empathetic, less sympathetic, angrier. When you have people saying that empathy is an enemy, I think that’s a manifestation of how people adapt to the online environment. Empathy gets in the way of promoting yourself, getting attention, being an edgelord.

One thing Turkle pointed out is that empathy is a complex emotion, unlike anger and fear, which are primal emotions that come from lower down in the brain and are triggered immediately. Empathy is something you learn how to feel, and it requires attentiveness to other people—trying to get inside their heads and understand them. One thing that online life steals from us is attention. Because we’re constantly overloaded with new messages, new information, we simply don’t have time to back away from the flow and say, “Let me think about whether this is important. Let me just pay attention to this person.”

When you’re constantly distracted, constantly shifting your attention rather than focusing it, not only do your thoughts become more shallow—because deep intellectual thinking requires concentration and focus—and not only does it affect your intellect. I think it affects your emotional capabilities too. You start to lose these deep, difficult, complex emotions that take time and attentiveness, and you revert to instinctive emotions like anger, fear, and belligerence. We see a lot of that online, and it is very concerning that people start to say, “Well, empathy wasn’t important anyway.” People are expressing the fact that they have destroyed their ability to experience empathy, so they say it’s not important anymore.

We see this on the intellectual side too, where people say, “I don’t need to read books anymore. I don’t need to focus on one thing for a long time. That’s just a waste of time. I need to process information as quickly as possible.” In adapting to our new environment, we start to take on the qualities of that environment.

RM: It makes me think of the way Jesus taught with parables in the Gospels. There was this sense of getting people to a point of perplexity: What does the father do when the son who has insulted him comes back home? Who was the neighbor to the person beaten by the side of the road? It’s almost required that we think this through and feel this through. And then Jesus turns it around and flips it on the person who’s hearing and reveals that it’s a completely different way of thinking.

I’m finding more young Christian students and others who really want to work on their spiritual development but say they don’t know how to read a text. They don’t know how to get lost in the Gospel of Mark or in the Book of Jeremiah. That’s the time we’re in, right?

NC: That’s absolutely right. Just as it takes time to learn how to feel complex emotions like empathy, you have to learn how to pay attention. This is one of the most important things in childhood education, because kids are naturally distractible. And so are adults. Being able to focus your mind on something important—maybe it’s what you’re reading, a conversation you’re having, or a work of art—is something that has to be learned and practiced.

We are not teaching kids the skills of managing their own minds. Instead, we give technology sway over our attention. We say that whatever comes up next on the phone is what I’m going to pay attention to. We’re not training kids how to manage their own conscious minds, which is essential to choosing what you want to do at any given moment. As adults, we’re losing that ability as well. We’re letting the technology make critical choices about what we think about rather than making those choices ourselves.

RM: I have found myself in some version of the following conversation countless times over the past several years: A group of religious leaders will say, “Look, Martin Luther used the printing press, and the Reformation exploded. Billy Graham used the newly emerging technology of radio and television. And if we’re going to engage with the generation to come, churches have to be able to do that via artificial intelligence.” But I haven’t yet found anybody who is doing that in a way that’s compelling to me.

A Roman Catholic group created an AI priest, Father Justin, who ended up being defrocked, even though he’s not real, because he became a heretic pretty quickly. What they assumed would be a delivery system that could help people with counsel and advice from their religious point of view ended up suggesting that baptisms can happen with Gatorade. You’re dealing with a technology that isn’t just a communications tool.

NC: Yeah, communication technology used to be just a transmission technology—people on one end, whether it was a telephone line or a broadcasting system, creating some kind of content and getting it to other people at the other end. It was just a transportation network for information. With Facebook and its algorithms and YouTube and TikTok with their algorithms, suddenly the machinery takes on an editorial function. It starts choosing what content to show or not show to people.

With AI, the machinery is going to take on what we long saw as the fundamental role of human beings in media systems, which is creating the content. So suddenly you have the network—the machinery—creating the content, performing the editorial function of choosing which pieces of content which people will see and then also being the transportation network.

When this happens, you have to start to wonder about the motivations of the people in the companies operating the networks, because suddenly they’re in a position of enormous power over everyone who is going onto these systems to socialize, to find information, to be entertained, to read, or to worship. We’re entering a world that we’re completely unprepared for and haven’t really thought about, because we’ve always assumed that ultimately it’s people who are creating the information that we pay attention to. With AI, that assumption goes out the window.

One of the dangerous possibilities is saying, “Well, it’s okay, we should just go with the flow, because at least AI can give us what we want really quickly and efficiently.”

So why sit down and struggle with writing a toast for my child at his or her wedding? Why sit down and write a sermon from scratch? Why write a letter to a friend when you can just plug the desired outcome into a machine and it’ll pump out adequate material very, very quickly?

I think that’s extremely tempting in many different areas. But in the end, what it steals from us is our own ability to make sense of the world and express understanding of the world. The ultimate effect is this flattening of humanity.

RM: One of the things that people will often point to as an upside of chatbots, for instance, is that people are sometimes reluctant to tell another person the truth when it comes to their mental or physical health. There have been studies that have shown people will reveal how much they are drinking more accurately to a chatbot than they will to their doctor.

NC: I’ve been emphasizing the negative consequences of an overdependence on technology. That doesn’t mean that the technology can’t be useful when applied to a specific problem. If you have algorithms that look at medical x-rays, they can sometimes spot things that human radiologists can’t. What you need is both a deeply informed human doctor to look at an x-ray and the assistance of a digital algorithm. They can work together.

The dangerous thing is if hospitals, or the companies that run hospitals and are looking to cut costs, simply say, “We can get rid of the doctor because doctors are expensive. And the machine’s pretty good at this.”

This is what can happen in all sorts of areas. It may well be that a chatbot can play a role in therapy or in medical situations in some ways, but I think you still need the deeply trained and deeply experienced professional to play the central role. The danger is to say, “The machine’s good enough, and it’s much cheaper and less time consuming than actually having human interaction. So we’ll just go with the machine.”

If you look at where American society stands today, it’s pretty clear that we have a crisis of loneliness. I’m not saying that computers and smartphones and social media are the only cause of that. I think it’s a very complex phenomenon that’s been going on for quite a long time. But this idea that you can socialize simply by staring into a screen ultimately leads to a mirage of socializing that actually leaves people lonely.

Nicholas Carr is a best-selling author who writes about the human consequences of technology.

Russell Moore is editor in chief at Christianity Today and the director of the Public Theology Project.