Children have an uncanny ability to ask deep questions about things we adults have come to take for granted. One of the earliest such questions I remember asking my father was about language: Why do some people speak different languages than us?

He answered by telling me about the Tower of Babel (Gen. 11:1–9): about how, when everyone spoke the same language, they cooperated to build a tower so high it would reach into heaven. He told me that God, frowning on their arrogance, made them speak differently, then scattered them throughout the earth. And that’s why, my father concluded, so many languages are spoken throughout the world.

Of course, this only raised many more questions for me—some of which I’m still pondering today. It occurs to me that every significant advancement in technology and society comes on the back of an advancement in communication. The Protestant Reformation rode on the back of the Gutenberg printing press. When electricity let language move almost instantaneously over great distances, significant changes followed. And the advent of the internet heralded an era of unprecedented technological growth and social change.

In a way, it seems to me we are still trying to build that tower, every technological advancement another brick in the wall. Now, we have a new technology literally built of our words: generative AI models like ChatGPT. To many technophobes and technophiles alike, these programs feel like the tower’s tallest height. Some predict a new era of prosperity; many others foresee doom. Will God confound our tongues and scatter us again?

The child in me wants an answer to that question. But it is too big a question for our purposes here. Instead of telling you what to think about these new technologies, I hope to encourage you to think about them. As a PhD researcher exploring the biases of these AI models and their far-reaching implications for society, culture, and faith, I’ve made it my mission to critically examine these technologies and their potential impact on our lives.

When we accept a new technology uncritically, we invite danger. The most striking recent example of this is our indiscriminate adoption of social media. Less than 10,000 days old, social media is still a relatively new technology. And yet, consider how much it has changed our lives—for better and worse. We didn’t ask the big and important questions about social media as it steadily permeated every aspect of our society, including many churches.

The risk in generative AI is even greater. I expect it will be far more significant—not least as a source of unnoticed bias, half-truth, and outright deception—than anything in recent history. It is essential for the church, often a voice of reason in our ever-evolving societies, to apply a critical and discerning eye to this new tier of our tower.

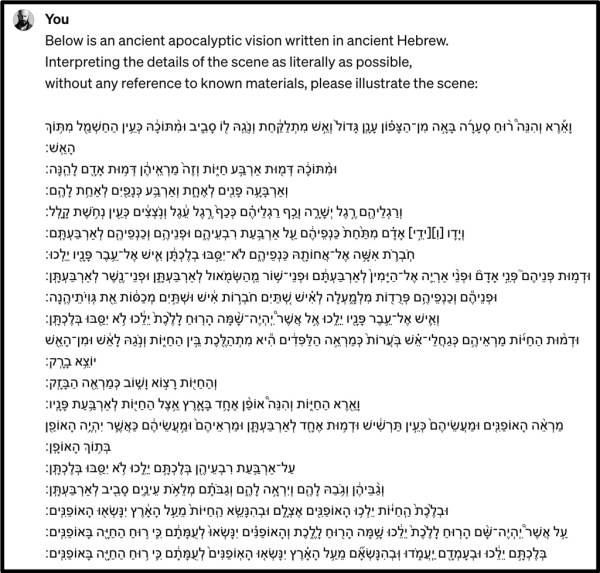

In this article, I’ll share insights from my current research on these AI models’ theological biases and social implications by exploring how they interpret biblical apocalyptic literature from the Book of Ezekiel. Prompting OpenAI’s ChatGPT to generate imagery inspired by these passages lets us critically examine how this model “reads” and represents Scripture.

Images are powerful because they can bypass our reasoning intellect and impact our emotions directly. As its use spreads, AI could influence our perception of biblical stories and, by extension, our faith and church life. Scrutinizing these AI-generated images, we will uncover subtle biases and limitations, and that should prompt us to consider their potential effect on our Christian communities.

Two methodological notes

First, because these models are rapidly evolving, it’s important to be clear about which technologies we are using and how. For this article, I used the current (as of early 2024) version of ChatGPT Plus. This platform employs two distinct generative AI technologies. The first is GPT-4 Turbo, OpenAI’s advanced language comprehension and generation system, which interprets my requests and generates creative text responses. The second is OpenAI’s DALL-E 3, an image generation model integrated into ChatGPT Plus.

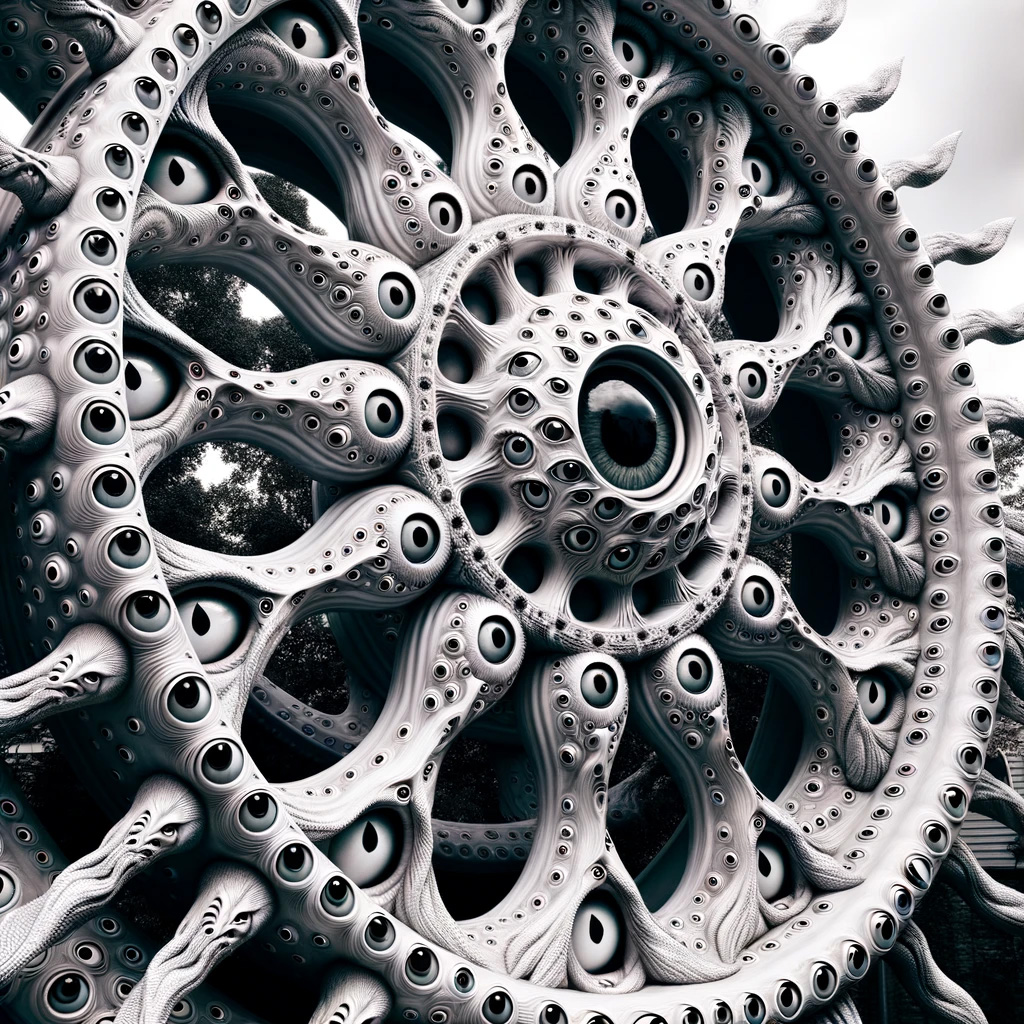

My interaction with the chatbot was designed to maximize its interpretive capabilities. As with humans, asking an AI model to do more interpretation can help force it to reveal its biases—to show its hand. My approach invited three levels of interpretation:

- Understanding the task: I give a prompt such as “Generate images that depict the imagery found in this biblical Hebrew text,” accompanied by the passage in its original language.

- Language translation: The model translates the biblical language into English to instruct the image generation model.

- Image generation: The final interpretation occurs in the generation of the images themselves.

Second, before we go further, a word about interpretation is helpful.

Whenever we engage in interpretation, we bring all of who we are to the task. When we attempt to imagine descriptions of the indescribable (and most prophetic and apocalyptic imagery fits into this category), we inevitably bring our knowledge and experience to the act.

For example, what does a wheel within a wheel covered with eyes look like? Five hundred years ago, a European like Sante Pagnini might have envisioned chariot-like wheels. During the Renaissance, Raphael depicted the angels in the vision as the infantile cherubim typical of the art of his day. An early 19th-century mystic like William Blake, by contrast, depicted the living creatures of the vision in a way that reflects the Romantic movement of his time. And in 1974, Josef Blumrich saw the wheels of the vision as a reference to spacecraft, reflecting the contemporary fascination with UFOs.

Of course, no one would assume any of these depictions are strictly accurate. They’re reflections of the people and their times, perhaps even more than they are reflections of the biblical text. Herein lies the problem: Visual depictions make one person’s interpretation of Scripture concrete—even unforgettable. They force themselves upon the imagination.

So before we begin using AI tools to illustrate our books, commentaries, and Bible studies, we should remember that images invariably overlay words with bias. And AI models aren’t immune to this any more than humans are. They’re trained on human work, which means they pick up our assumptions and biases, and not only those of people who share our faith. This should give us pause.

With that in mind, let’s look at some AI-generated biblical interpretations.

Ezekiel’s first vision

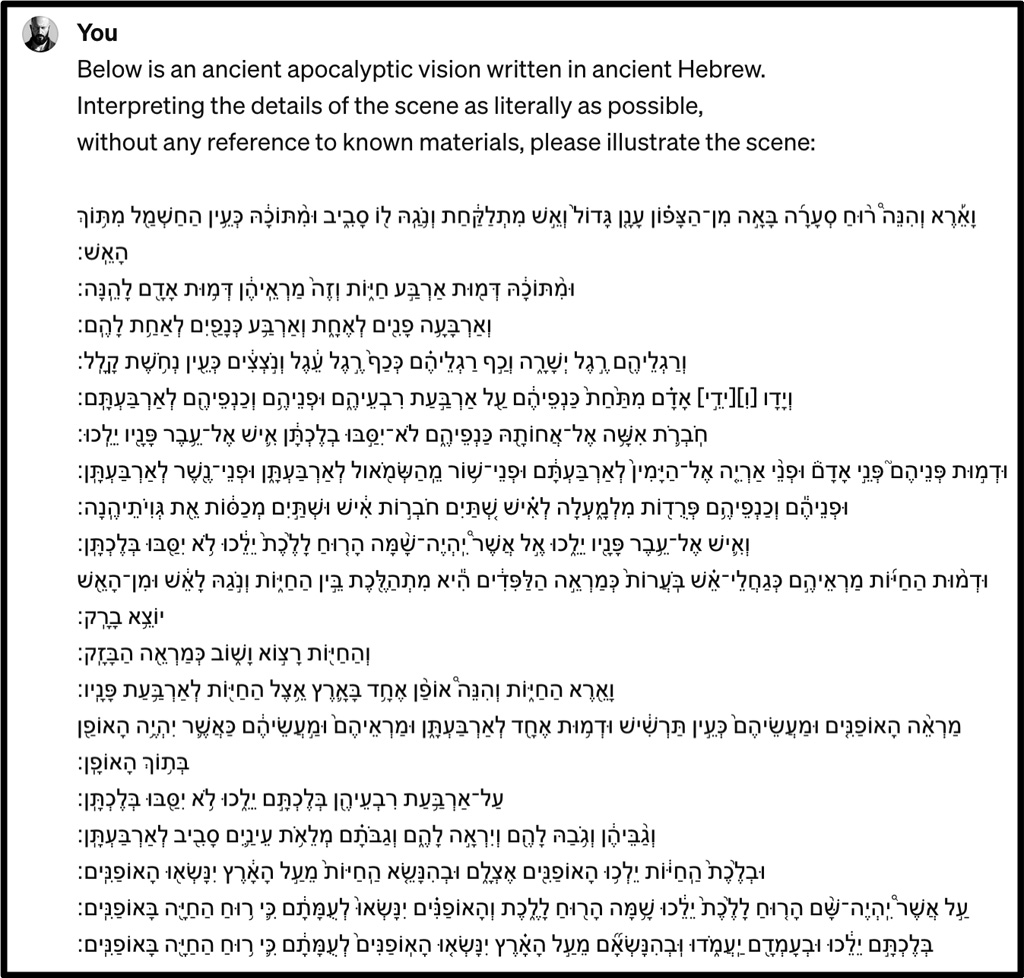

My first prompt for ChatGPT Plus uses the text of Ezekiel 1:4–21 in the original Hebrew.

Courtesy of A.G. Elrod

There are several key visuals in this passage:

- The four living creatures, each with four faces (a human, a lion, an ox, and an eagle) and four wings

- Wheels within wheels, gleaming like beryl, each wheel full of eyes around the rim, moving in all directions alongside the creatures

- Fire, shining metal, and lightning

Admittedly, this is a lot for a single image. Let’s see how the AI interprets the scene:

Courtesy of A.G. Elrod

Let’s begin with the overall impression: the image is frightening. Such a vision would undoubtedly have made Ezekiel fall on his face (v. 28). The AI has prominently featured the peripheral imagery of fire, lightning, a storm, and gleaming metal. At the bottom, there are what appear to be men, some of whom are carrying rods and tree branches, as well as a hooded, winged angel.

At the center of the image is an attempt at visualizing the living creatures. They appear without skin and seemingly fused together, with three of the four heads disembodied. In the center of each head is a glowing orb or a gem, and the faces are skeletal. There are indications of rings behind the living creature(s) that are probably an effort to render a wheel within a wheel. The most prominent wheel functions like a rainbow and is covered with circles rather than eyes. Inside the circles we see a virus, a galaxy, and faces, among many other indistinct images.

This interpretation appears to be heavily layered with symbolic meaning—but remember that AI models are designed to simulate an understanding of significance. Sometimes that produces images that look profound but, on closer examination, have no deeper meaning or coherence.

If you came across this image without context, there’s little chance you’d identify it as Ezekiel’s first vision. Instead of a helpful interpretation, we see a failure in the model’s capabilities. It appears that Ezekiel’s detailed description was too much for the model to follow.

Even so, we can spot a few biases. For instance, the wheel covered in circular images is reminiscent of medieval iconography. Halos appear around the faces, bringing to mind early Christian depictions of holiness. The central figure’s strong resemblance to Leonardo da Vinci’s Vitruvian Man might indicate a bias toward linking importance or profound meaning with scientific progress. And it seems ChatGPT isn’t limiting itself to historically Christian cultures: The dot in the center of the forehead (bindi) and the many limbs (Vishnu) are reminiscent of Hinduism.

Next, let’s see how the model performs when given fewer elements of Ezekiel’s vision: just the description of the eye-covered wheels.

Courtesy of A.G. Elrod

This one may be more easily identified as a representation of the scriptural text. But here, the model has synthesized organic and mechanical aesthetics: this angel is both machine and animal. The intricate design conveys an otherworldly intelligence, a common theme in modern human interpretations of angelic beings.

The mechanical gears and the interconnected structure may also subtly reflect our modern fascination with complex machinery and interconnected systems, suggesting a contemporary bias in the AI’s training data. The fluid blend of these elements produces a visual that is at once ancient and futuristic. And while arguably closer to the textual description than the first attempt, this image still clearly reveals ChatGPT’s layers of cultural and temporal biases.

For a final prompt using this passage, I used only the verses depicting the living creatures:

Courtesy of A.G. Elrod

Here, the AI’s interpretation of the four faces offers a striking image, but it strays from the biblical account, focusing mainly on the lion and human faces. The horns and some suggestion of wings might gesture toward the ox and eagle, but three of the four faces look most like lions.

This highlights a common challenge in AI image generation: the tendency to prioritize certain patterns or features over others. The image falls short of a balanced depiction of the scriptural vision, again revealing the AI’s limitations in synthesizing complex, multifaceted descriptions into a comprehensive whole.

All three images from this passage underscore AI’s inability to translate the rich and multifaceted language of apocalyptic literature into a single, accurate visual. The biases evident in these images—a fusion of medieval iconography, Renaissance humanism, and Eastern religious motifs—speak to the AI’s reliance on the diverse historical and cultural inputs upon which it’s been trained. This should induce caution in the use of AI in our faith practices, as it shows how the technology could powerfully shape—and very possibly distort—our understanding of Scripture.

Ezekiel’s vision of the valley of dry bones

For the second exercise, I gave the AI a more manageable vision, using Ezekiel 37:1–14. I also adjusted the prompt to provide ChatGPT with step-by-step instructions.

Courtesy of A.G. Elrod

In this well-known and often illustrated vision, we expect to see the following elements:

- The prophet standing amid scattered bones

- The progression of transformation, where the bones assemble themselves into human forms, gaining flesh and being covered with skin

- A valley that, while desolate, carries an air of hope for divine intervention

Here’s what the model produced:

Courtesy of A.G. Elrod

Overall, the AI has done a good job of capturing the scene’s texture. While there’s a sense of desolation and hopelessness in the foreground, as the eye follows the valley’s path toward the upper-right corner of the image, there are signs of hope: a blue sky shining through the clouds and light pouring in from above. In the distance, the bones progress toward their resurrection as living flesh.

But there are also some oddities we should note. Foremost is the nonsensical text at the bottom of the scene. This may be the AI attempting to complete step two of the instructions by adding a prompt to the image itself—or the model may simply be hallucinating. What begins as a promising sentence quickly devolves into verbal chaos. Insofar as it’s intelligible, it’s the opposite of what we are witnessing and completely divergent from the biblical passage.

Second, while the prophet makes his expected appearance in the scene, there’s also the curious and unaccountable presence of a woman dressed similarly to the prophet. She could be a picture of the final stage of the bones’ resurrection, but her placement in the foreground makes this explanation unlikely. This might be another example of the model hallucinating.

Then there’s the valley itself. The model seems to have understood the inherent strangeness of the scene, which it represents with a decidedly alien landscape. The decision makes a certain sense, but it means the miraculous is separated from the “real” world. The model also brings in historical Christian iconography not explicitly mentioned in the text: white birds and light pouring through openings in the clouds.

Though the scene proved a more manageable task for the AI, its rendering of the valley is again a visual commentary replete with the biases and limitations of its programming. The use of Christian motifs reflects a tendency to draw on familiar religious symbolism, perhaps indicating that the model is parroting more than interpreting. And the addition of unexplained elements raises questions about the AI’s basic reliability, while the landscape’s otherworldly ambiance makes an unorthodox theological statement.

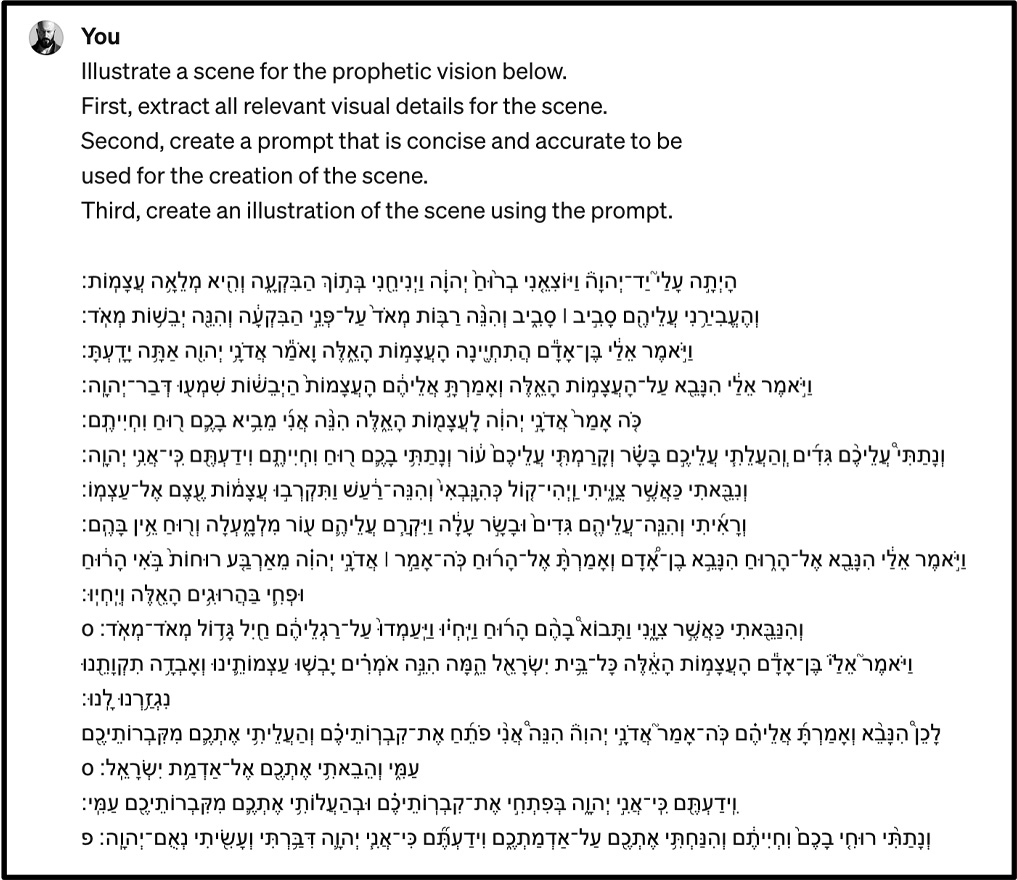

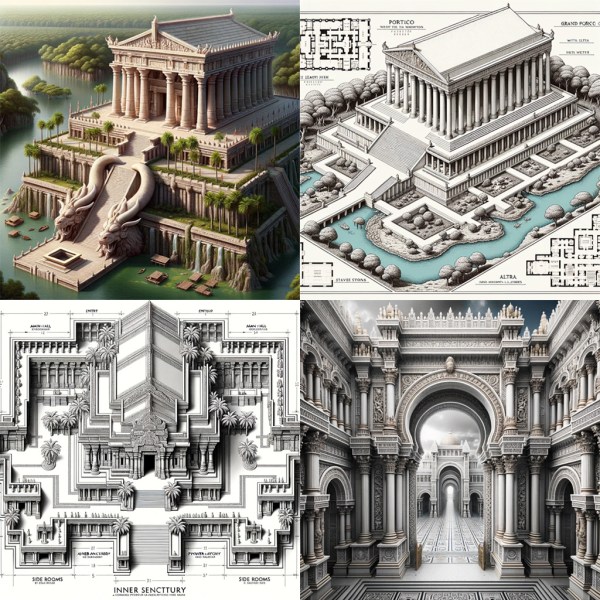

Ezekiel’s vision of the temple

What’s the first image that pops into your mind when I say the word temple? Would you see Buddhist monks sitting in the lotus position somewhere in East Asia? Maybe the Parthenon in Greece? The biblical temple in Jerusalem? Or perhaps even a college basketball team?

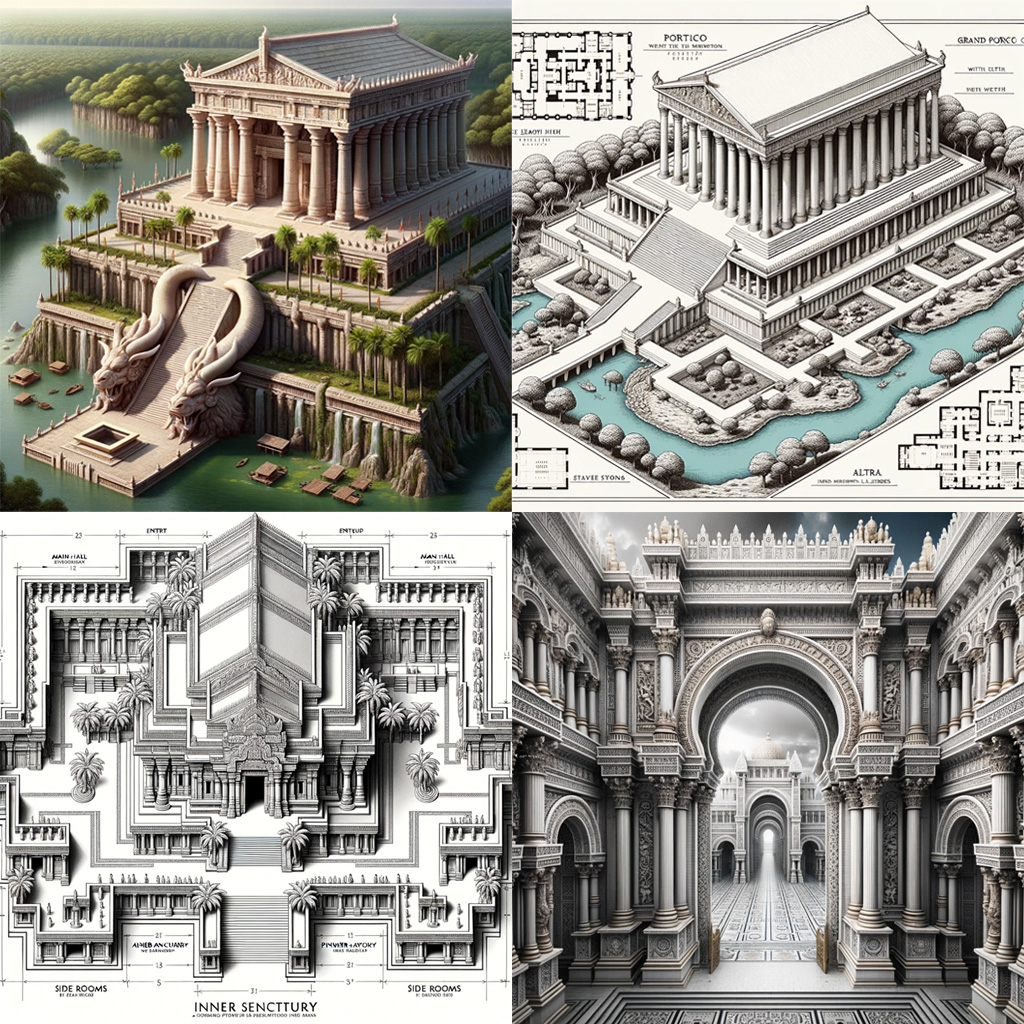

These initial reactions tell us something about our own visual biases. AI models have the same kind of bias: the most common input for a given word or topic tends to inform the most common output. For this final task, I prompted the model several times with Ezekiel’s vision of the temple (Ezek. 40–48).

Courtesy of A.G. Elrod

The first image (top left) presents an unusual blend of Greek and Asian styles in temple architecture. At the highest level, there’s a resemblance to the Parthenon, complete with its many Doric columns. But as the eye travels downward, the design takes on distinctly Asian characteristics, including the prominent dragon heads common in Chinese art.

The setting seems to be a swampy area, which might be the AI’s way of visualizing the flowing water described in the passage.

The second image (top right) seems to have taken a cue from the many measurements interspersed throughout the text, resulting in an image that is part blueprint, part illustration. Oddly, the blueprint elements seem to have nothing to do with the actual measurements present in the passage. Most of the words are nonsense, and the numbers necessary for a useful blueprint are nonexistent. Again, we see ample attention to the passage’s mention of water and a structure reminiscent of the Parthenon.

In the third image (bottom left), we’re treated to a similar fusion of blueprint and illustration. This time, the blueprint elements are more prominent and include words and numbers. A few of the words (for example, “side rooms”) make sense, but most are nonsense or misspellings (“inner sencttury”). Many of the numbers are legible, but none make sense. As in the first image, special attention is given to palm trees mentioned in the passage, and we again see a mixture of classical and Asian elements.

The last image (bottom right) attempts an interior view of the temple. The columns and arches featuring intricate friezes and relief sculptures are reminiscent of ancient Roman or Greco-Roman art. Meanwhile, the grand arches and domes suggest an influence from Byzantine or Islamic architecture as well as Gothic architecture, known for its ribbed vaults and pointed arches.

Here, the model appears to be drawing on diverse elements of its training rather than adhering strictly to the scriptural account. And indeed, this is the case with each of the images: They tell us more about what the model “knows” than about the text itself or the AI’s interpretation of the text.

All four images also reveal a telling paradox. Traditional computing systems excel in precision and rule-based calculations, where the output faithfully mirrors the input with mathematical exactitude. But AI models, designed to mimic human-like understanding, instead excel in simulating our creativity and association.

Our expectation that a computer would effortlessly generate precise architectural renderings from Ezekiel’s meticulous measurements clashes with the reality of these images. Instead of a faithful reconstruction, the AI has produced a pastiche of architectural styles with no mathematical correspondence to the biblical text.

The pattern of this world

New technologies inevitably impact how we think about and interact with our world. We often find the old adage holds true: The times change, and we change with them. This is why it’s paramount that we think through possible implications of these changes in advance so we can navigate them with foresight and wisdom.

Generative AI should be no exception to this rule. Essentially a form of highly advanced pattern recognition, it can and does express distinct biases that may have profound implications for how we understand the Bible.

In the images I’ve shared here, ChatGPT reveals a tendency to favor the familiar and visually exciting over faithfulness to the text. It’s as if the model sifts through a global mosaic of art and architecture, choosing the grand and the dramatic over the scripturally precise. And AI images of Scripture may incorporate many subtler assumptions and suggestions—influenced by secular humanism, progressive social ideologies, different religions, and other unknown inputs—less noticeable than the failings I’ve documented here.

Because of how they’re trained, these generative models will always tend to follow prevailing cultural trends. They will bend with the spirit of the age rather than stand against it. That makes them consistently risky as a source of biblical interpretation, in words as well as images like these, for Christians are called not to “conform to the pattern of this world, but [to] be transformed by the renewing of” our minds (Rom. 12:2).

Indeed, just as AI outputs are influenced by the models’ training material, so does what we take in affect how we see the world and interpret Scripture. We are no more immune to the input-affects-output phenomenon than are these machines. This means that if we’re consuming AI-generated images of biblical passages—not to mention continuing our more ordinary doomscrolling in our social media feeds—we would do well to remember that we become what we absorb (Phil. 4:8–9).

None of this is to deny that these new technologies are remarkable and potentially valuable tools that will only become more impressive. On the contrary, the obvious usefulness of generative AI is exactly why Christians must be an informed part of the AI discussion going forward.

It’s hard not to feel small and powerless in the shadow of this growing tower. Yet our role is not to be mere spectators of the world but discerning participants, “as shrewd as snakes and as innocent as doves” (Matt. 10:16). Amid rapid technological advancement, our challenge is to engage with wisdom, ensuring that Christ remains the compass by which we navigate this new terrain. As we hear the echoed voices of those working on this tower saying, “Come, let’s … make a name for ourselves” (Gen. 11:3–4), we do well to remember that we already have a Name.

A.G. Elrod is a lecturer of English and AI ethics at the HZ University of Applied Sciences in the Netherlands. He is also a PhD researcher at Vrije University Amsterdam, exploring the biases of generative AI models and implications of their use for society, culture, and faith.